What Is Data Quality Management? 5 Key Pillars to Know

Introduction to Data Quality Management

Collecting, organizing, and storing data is only half the battle of data management. Information left in databases can deteriorate over time, losing accuracy and value. To avoid this, many companies practice data quality management to preserve information and maintain compliance with data quality standards.

What is Data Quality Management?

Data quality management (DQM) consists of maintenance practices to enhance the quality of information. DQM spans from the initial data collection all the way to the implementation of data management solutions and information distribution. Businesses recognize DQM as an essential internal process to keep the information up to date and reliable. Otherwise, data can deteriorate with time, decreasing accuracy and the impact of insights.

Businesses need DQM now more than ever because many run several software solutions at once, which makes data difficult to maintain without standardized practices. By establishing DQM processes, companies can optimize their databases, data collection methods, and maintenance. This helps ensure that generated reports, analyses, and insights are accurate and impactful.

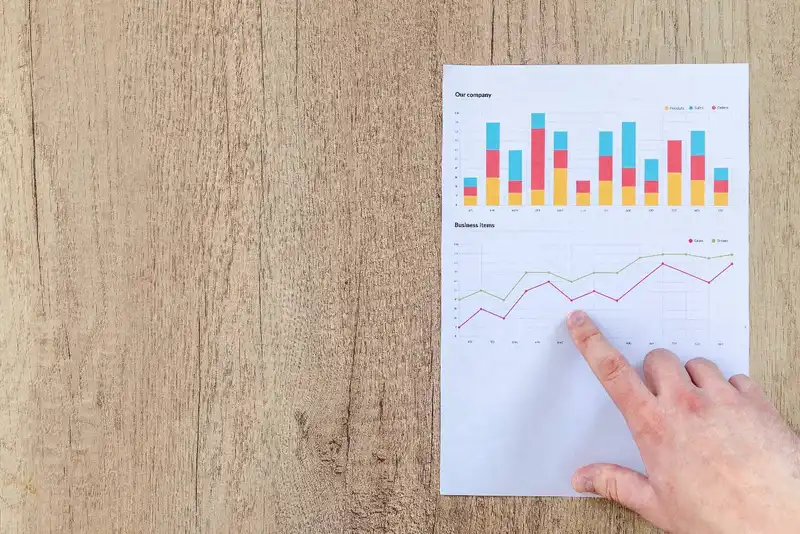

Metrics for Measuring Data Quality

Measuring data quality is critical for businesses that rely on historical and real-time information to make informed decisions. Without DQM, companies cannot objectively assess their performance level. Therefore, organizations should review the key metrics that determine the overall quality of datasets.

Accuracy

Data accuracy refers to how closely information matches the real-world status it describes, a status that changes continually as businesses cycle in real-time information. In other words, the newest information replaces outdated information to provide the most accurate details. Companies can measure data accuracy through extensive source documentation and verification methods to ensure information is accounted for and correct.

Businesses that use management software can track accuracy by monitoring the ratio of data to errors, a key performance indicator (KPI). This metric counts the number of missing and erroneous data fields and compares it to the size of the overall dataset. Companies should, of course, strive to decrease quality issues, but they can begin by establishing a risk appetite benchmark. In other words, managers should determine the maximum share of mistakes they will tolerate in their systems.
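The ratio of data to errors can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the sample records, the `looks_valid` rule, and the `RISK_APPETITE` threshold of 5% are all hypothetical values chosen for the example.

```python
def error_ratio(records, required_fields, is_valid):
    """Return the share of checked fields that are missing or invalid."""
    total = 0
    errors = 0
    for record in records:
        for field in required_fields:
            total += 1
            value = record.get(field)
            # A field counts as an error if it is empty or fails validation.
            if value is None or value == "" or not is_valid(field, value):
                errors += 1
    return errors / total if total else 0.0


# Hypothetical sample data for illustration only.
customers = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "", "email": "bob@example.com"},
    {"name": "Cy", "email": "not-an-email"},
]


def looks_valid(field, value):
    # Deliberately simple rule: an email must contain "@".
    return "@" in value if field == "email" else True


RISK_APPETITE = 0.05  # assumed benchmark: tolerate errors in at most 5% of fields

ratio = error_ratio(customers, ["name", "email"], looks_valid)
within_benchmark = ratio <= RISK_APPETITE
print(f"error ratio: {ratio:.1%}, within benchmark: {within_benchmark}")
```

Comparing the ratio against a fixed threshold gives managers a simple pass or fail signal that can be tracked over time.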

Consistency

Data consistency ensures datasets throughout all systems match, regardless of where they originate. For example, a customer's profile should have the same personal information in the point-of-sale (POS) and customer management systems. If the data conflicts, businesses cannot tell which dataset is correct.

With system integration, companies can connect all of their established software to create a universal interface. Integration allows solutions to share real-time data with each other so that data stays consistent throughout the company. However, it is important to differentiate consistency from accuracy, because datasets can match and still be incorrect.
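A consistency check between two systems might look like the following sketch. The `pos` and `crm` dictionaries are hypothetical stand-ins for exports from a POS and a customer management system, keyed by an assumed shared customer ID.

```python
def find_conflicts(system_a, system_b, fields):
    """List (key, field, value_a, value_b) for shared records that disagree."""
    conflicts = []
    # Only records present in both systems can be compared.
    for key in sorted(system_a.keys() & system_b.keys()):
        for field in fields:
            a = system_a[key].get(field)
            b = system_b[key].get(field)
            if a != b:
                conflicts.append((key, field, a, b))
    return conflicts


# Hypothetical exports keyed by customer ID.
pos = {
    "c1": {"email": "ada@example.com", "phone": "555-0100"},
    "c2": {"email": "bob@example.com", "phone": "555-0111"},
}
crm = {
    "c1": {"email": "ada@example.com", "phone": "555-0100"},
    "c2": {"email": "bob@example.com", "phone": "555-0199"},
    "c3": {"email": "cy@example.com", "phone": "555-0123"},
}

conflicts = find_conflicts(pos, crm, ["email", "phone"])
for key, field, a, b in conflicts:
    print(f"{key}.{field}: POS has {a!r}, CRM has {b!r}")
```

Note that this only reports mismatches. It cannot say which value is right, which is why consistency and accuracy need separate checks.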

Completeness

Completeness refers to the wholeness of data, which lets businesses draw holistic conclusions and develop actionable insights. Companies can measure data completeness by checking fields for any missing inputs. Again, system integration can pull relevant data from other sources to fill in forms and ensure completeness.
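Checking fields for missing inputs can be expressed as a per-field completeness rate. The sketch below assumes that `None` and the empty string both count as missing; the `profiles` records are hypothetical.

```python
def completeness_by_field(records, fields):
    """Return the fraction of records with a non-empty value for each field."""
    total = len(records)
    result = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        result[field] = filled / total if total else 0.0
    return result


# Hypothetical customer profiles for illustration only.
profiles = [
    {"name": "Ada", "phone": "555-0100"},
    {"name": "Bob", "phone": ""},
    {"name": "Cy"},
    {"name": "Di", "phone": "555-0144"},
]

completeness = completeness_by_field(profiles, ["name", "phone"])
print(completeness)
```

A per-field breakdown shows where the gaps are, which is more useful for follow-up than a single overall percentage.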

Integrity

Data integrity, also referred to as data validation, is the testing of datasets to ensure businesses are compliant with policies. Specific industry regulations require companies to check data accuracy and use certain formats. Therefore, organizations must remain compliant to avoid fines and other penalties.

To measure integrity, businesses need to monitor the data transformation error rate. This metric determines how many times internal processes fail to correctly reformat data as it moves between locations. If this rate continues to increase, companies may need to consider new conversion methods.
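As a rough illustration, the data transformation error rate can be computed by counting how often a conversion step fails. The example assumes a hypothetical pipeline that reformats `MM/DD/YYYY` dates to ISO 8601; the `raw_dates` values are made up.

```python
from datetime import datetime


def to_iso_date(text):
    """Convert an MM/DD/YYYY string to YYYY-MM-DD; raise ValueError if it does not parse."""
    return datetime.strptime(text, "%m/%d/%Y").date().isoformat()


def transformation_error_rate(values, transform):
    """Return the fraction of values for which the transform raises an error."""
    failures = 0
    for value in values:
        try:
            transform(value)
        except (ValueError, TypeError):
            failures += 1
    return failures / len(values) if values else 0.0


# Hypothetical input: two well-formed dates and two that cannot be converted.
raw_dates = ["01/15/2024", "2024-02-01", "13/40/2024", "03/09/2024"]

rate = transformation_error_rate(raw_dates, to_iso_date)
print(f"transformation error rate: {rate:.0%}")
```

Tracking this rate per run makes an upward trend visible early, before it prompts a change in conversion methods.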

Timeliness

Data timeliness refers to the availability and accessibility of information at any given time. In other words, it is the difference between when companies expect information and when they actually receive it. As businesses rely on real-time data to improve various strategies, they must actively measure timeliness.

The time-to-value metric measures the value of information based on its timeliness. Since organizations prefer real-time data, they usually find that the most up-to-date information provides the most value. Therefore, managers should strive to establish systems that pull data quickly and accurately.
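The gap between expected and actual arrival can be measured directly. This sketch uses hypothetical delivery timestamps and treats early arrivals as zero lag, which is an assumption rather than a standard definition.

```python
from datetime import datetime, timedelta


def delivery_lag(expected_at, received_at):
    """Return how late the data arrived; early arrivals count as zero lag."""
    return max(received_at - expected_at, timedelta(0))


# Hypothetical (expected, received) pairs for three data feeds.
deliveries = [
    (datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 9, 10)),
    (datetime(2024, 1, 8, 9, 0), datetime(2024, 1, 8, 8, 55)),
    (datetime(2024, 1, 8, 12, 0), datetime(2024, 1, 8, 12, 20)),
]

lags = [delivery_lag(expected, received) for expected, received in deliveries]
average_lag = sum(lags, timedelta(0)) / len(lags)
print(f"average lag: {average_lag}")
```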

The 5 Pillars of Data Quality Management

In addition to the various data quality metrics, businesses should also consider the five pillars of successful DQM.

The People

Although technology has the power to significantly improve a business's performance, it is only as effective as the people controlling it. If employees are not skilled in and knowledgeable about the solution's functionality, the company will not optimize its operations. By creating an employee hierarchy, organizations can establish roles based on workers' skills, experience, and abilities.

- DQM Managers are responsible for overseeing all business intelligence expansion efforts. Therefore, they must stay up to date on the project scope, budget, team members, and implementation.

- Organization Change Managers organize and assist on various projects, from establishing software dashboards to generating reports.

- Data Analysts are the busy bees who work toward set goals, such as improving data quality, timeliness, and integrity. These are the employees who actually execute the organized plans.

Data Profiling

Data profiling is a cyclical process in DQM that consists of four steps.

1. Extensively reviewing every data set.

2. Cross-examining data against its metadata.

3. Testing statistical models.

4. Generating reports on the overall data quality.

The data-profiling process is necessary to develop insights and reports from data. Without this process, businesses cannot ensure information accuracy and completeness.
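The four profiling steps can be approximated for a single column as follows. This is a simplified sketch: the expected type stands in for metadata, basic summary statistics stand in for statistical models, and the `order_totals` values are hypothetical.

```python
from statistics import mean, pstdev


def profile_column(values, expected_type):
    """Review a column, compare it to its expected type, and summarize it."""
    # Step 1: review every value, separating missing entries.
    present = [v for v in values if v is not None]
    # Step 2: compare each value against the metadata (here, the expected type).
    typed = [v for v in present if isinstance(v, expected_type)]
    # Step 4: collect the findings into a report.
    report = {
        "count": len(values),
        "missing": len(values) - len(present),
        "type_mismatches": len(present) - len(typed),
    }
    # Step 3: basic statistics as a stand-in for statistical testing.
    if typed and expected_type in (int, float):
        report["mean"] = mean(typed)
        report["stdev"] = pstdev(typed)
    return report


# Hypothetical column with one missing value and one wrongly typed value.
order_totals = [20.0, 30.0, None, "n/a", 40.0]

report = profile_column(order_totals, float)
print(report)
```

Because profiling is cyclical, a function like this would typically be rerun on a schedule so reports can be compared between cycles.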

Defining Data Quality

The quality pillar centers on what data quality means to each business specifically. The definition of data quality can look vastly different between companies because they hold different standards. For example, some organizations may have a high risk appetite, allowing more data discrepancies than others.

This typically depends on the business's industry, competition, and customers. As markets fluctuate, the meaning of data quality can also change with time. Therefore, companies should monitor their business intelligence and update their definition.

Data Reporting

The fourth pillar of DQM is data reporting, which is the process of removing and recording any compromised data. Based on the company's standards, managers should identify and record discrepancies from each individual system. After consolidating this information, management can identify common variables and recurring patterns. This enables the company to implement preventative measures.
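Once discrepancies from each system are consolidated, recurring patterns can be surfaced with a simple tally. The `discrepancy_log` entries and their field names below are hypothetical.

```python
from collections import Counter

# Hypothetical consolidated log of discrepancies recorded from each system.
discrepancy_log = [
    {"system": "POS", "field": "email", "issue": "invalid format"},
    {"system": "CRM", "field": "phone", "issue": "missing value"},
    {"system": "POS", "field": "email", "issue": "invalid format"},
    {"system": "POS", "field": "address", "issue": "missing value"},
]

# Count how often each (system, issue) combination occurs.
by_pattern = Counter((d["system"], d["issue"]) for d in discrepancy_log)
most_common = by_pattern.most_common(1)[0]

for (system, issue), count in by_pattern.most_common():
    print(f"{system}: {issue} x{count}")
```

The most frequent combinations are natural starting points for preventative measures.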

Data Repair

Data repair consists of remediating data and implementing a new system to improve information accuracy. To find the root cause of erroneous data, managers must identify why, where, and how the data became defective. With these details, developers can build quality tools that repair the defects and prevent similar errors.